Infrastructure

Resource Configuration

Configure your Chalk Kubernetes cluster

Overview

The Settings page of the dashboard exposes configuration options that you can use to tune your Chalk environments for your specific workload and latency requirements.

Kubernetes

Under Settings > Kubernetes, you can view all the pods in your Kubernetes cluster under the namespace

for your environment, in addition to their phase and creation timestamp. By clicking on the pod name,

you can also view the Kubernetes Pod View. This will include data about the pod, such as the resource

request, IP address, and resource group, as well as data about the node on which the pod is scheduled.

In the Pod View, you can also see the logs for the pod, which can be useful for debugging errors on

branch deployments or job pods.

Nodepools

Under Settings > Nodepools, you can view and configure the nodepools used to run your Chalk environment. A nodepool defines constraints on the Kubernetes nodes that can be provisioned in your cluster. Notably, you can select the machine families to use in your deployment, since different machine families are optimized for different workloads, and you can set a CPU limit on the nodepool. Together, these settings let you provision the resources and machine families best suited to your workload and usage requirements.

When configuring a nodepool, you’ll notice two checkboxes, “Isolate this nodepool” and “Restrict to Chalk workloads only”.

When “Isolate this nodepool” is checked, workloads cannot be scheduled in the nodepool unless they are specifically configured to do so under Settings > Resources. This can be useful to minimize interference or to reserve certain instance types for specific workloads. The nodepool is given the Isolated label.

When “Restrict to Chalk workloads only” is checked, unrelated non-Chalk workloads in the cluster are not allowed to schedule on the nodepool. This can be useful for environments deployed into pre-existing clusters that also run unrelated workloads, because it ensures that those workloads do not inadvertently consume Chalk credits. The nodepool is given the Restricted label.

Nodepools that you create through the Chalk dashboard automatically receive the Chalk label. This label indicates that the nodepool provisions Chalk-managed nodes, whose uptime contributes to credit usage. The dashboard also displays nodepools without the label, but Chalk workloads are only allowed to schedule on nodes from nodepools that have it.

More details about these labels can be found in the Billing Documentation.
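
The three label rules above can be summarized as a small eligibility check. The following is a minimal sketch, not Chalk's implementation: the `Nodepool` and `Workload` classes and the `can_schedule` function are hypothetical names introduced for illustration, and the model assumes that a workload is "specifically configured" for a nodepool when the nodepool name appears in its `selected_nodepools`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Nodepool:
    name: str
    # Subset of {"Chalk", "Isolated", "Restricted"} (hypothetical representation).
    labels: frozenset = frozenset()


@dataclass(frozen=True)
class Workload:
    name: str
    is_chalk: bool
    # Nodepools this workload was explicitly assigned to under Settings > Resources.
    selected_nodepools: frozenset = frozenset()


def can_schedule(workload: Workload, pool: Nodepool) -> bool:
    """Return True if the label rules described above allow this placement."""
    # Chalk workloads only run on nodepools that carry the Chalk label.
    if workload.is_chalk and "Chalk" not in pool.labels:
        return False
    # Restricted nodepools reject non-Chalk workloads.
    if not workload.is_chalk and "Restricted" in pool.labels:
        return False
    # Isolated nodepools accept only workloads explicitly configured for them.
    if "Isolated" in pool.labels and pool.name not in workload.selected_nodepools:
        return False
    return True
```

For example, a gRPC query server that selects an isolated nodepool is eligible for it, while a branch server with no explicit selection is not.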

Once you have configured your nodepools, you can go to Settings > Resources to select the nodepool to use for each service in each resource group in your environment, as well as the Pod Disruption Budget.

Resources

Under Settings > Resources, you will find the Cloud Resource Configuration page, which enables you

to set the default resource requests, autoscaling, and limits for each service in each resource group

in your Chalk environment.

All Chalk environments will have a Default resource group. If you have a specific use case which would

require more resource groups, please reach out to the Chalk Team.

In a resource group, you can specify the default resource requests, pod instances, instance types, and

nodepools for each service. The resource configurations for the Query Server, gRPC Query Server, and the

Branch Server will be used to spin up pods during deployments. The Job Queue Server configuration

specifies a pool of workers that are used to process asynchronous offline query jobs, scheduled queries,

and aggregation backfills. The worker pool will automatically scale up and down based on the number of jobs

in the queue to process. The resource requests for asynchronous offline queries can be overridden in the

resource_request parameter of the ChalkClient.offline_query() method, and the resource requests for

Scheduled Queries can be overridden in the dashboard in the Scheduled Query Configuration tab for the job.

To isolate resources for different services, you can select Isolate from other Chalk services, which ensures that pods for that service are only scheduled on nodes provisioned for that service. For example, if you serve production traffic from your gRPC query servers, you can isolate their resources so that Karpenter consolidation of other pods, such as offline jobs or the branch server, does not affect your gRPC query server pods.

For resource optimization, the Instance Type selector lets you specify the machine family to use for your deployment. For example, this maps to EC2 Instance Types for AWS deployments or Compute Engine Machine Families for GCP deployments.

By default, the Ephemeral Storage for a service is set to the same value as its Memory, but if you are deploying larger files, you can specify the Ephemeral Storage separately.

How are Pods and Nodes Provisioned?

In the Settings page of the dashboard, you can configure the nodepools and resource configurations that are

used in combination to provision resources in your cluster.

Whenever a new pod needs to be spun up, whether because of a manual trigger, a cron schedule, or autoscaling, Chalk creates the pod with resource requests, node selectors, affinities, and anti-affinities based on the Resource Configuration set for the service the pod belongs to. The Kubernetes scheduler then attempts to place the pod on an existing node. If no node satisfies the pod’s configuration, Karpenter analyzes the pod and the other nodes in the cluster, and provisions or deprovisions nodes so that the new pod can be scheduled.
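
The placement decision described above can be sketched as a first-fit check over existing nodes. This is a deliberately simplified illustration, not the Kubernetes scheduler or Karpenter: it only compares CPU and memory, ignores node selectors, affinities, and anti-affinities, and the `Node` class and `place_pod` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu: float
    free_memory_gib: float


def place_pod(cpu: float, memory_gib: float, nodes: list[Node]) -> str:
    """Return the name of the first node with room, or "provision" if none fits."""
    for node in nodes:
        if node.free_cpu >= cpu and node.free_memory_gib >= memory_gib:
            # Reserve the requested resources on the chosen node.
            node.free_cpu -= cpu
            node.free_memory_gib -= memory_gib
            return node.name
    # No existing node fits, so a node provisioner would have to add capacity.
    return "provision"
```

In this sketch, the `"provision"` result stands in for the point at which a node provisioner would add or rearrange nodes within the nodepool constraints.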

For AWS-hosted clusters, we use Karpenter nodepools, and for GCP-hosted clusters we use GKE node auto-provisioning (NAP). Both Karpenter and GKE NAP will attempt to optimize resource provisioning based on the provided nodepool constraints. You can manage these nodepool constraints in a few ways:

- Edit your existing nodepools: Under Settings > Nodepools, you can edit the nodepool constraints to restrict or widen the nodes that Karpenter can produce.

- Create new nodepools: Under Settings > Nodepools, you can create new nodepools with more restrictive node constraints, marking them as “isolated” to prevent services from scheduling on them, and configure one or more services to run only on those nodepools.

- Specify Nodes in Service Requests: Under Settings > Resources, you can make services request specific kinds of machines under the Service Isolation pane.

While customers on both GCP and AWS can create nodepools through the Nodepools tab in the dashboard, the kinds of nodepools they can create differ. Typically, a Karpenter nodepool is defined to provision a range of node types, whereas NAP on GCP creates nodepools in response to pods that need to be scheduled, and each of those nodepools creates exactly one kind of node and scales only by the number of node instances. Chalk lets you create nodepools regardless of cloud provider, but on GCP you must choose between allowing NAP to make its own decisions about which node types to provision and defining your own set of nodepools to force services to run on specific instance types.

When choosing how to constrain pod resource requests and nodepools, it is possible to request more resources than an instance type has available. The Chalk dashboard warns you when the requested resources cannot be scheduled on any available node, but it can be difficult to estimate the amount of overhead on each Kubernetes node. As a rule of thumb, a resource request of about 75% of the stated node capacity is reasonable.
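
As a worked example of the 75% guideline, the sketch below computes the largest request that stays within that headroom for a given node size. The function names and the example node size are illustrative assumptions, and the real overhead on a node varies by provider and configuration.

```python
def suggested_max_request(
    node_cpu: float, node_memory_gib: float, headroom: float = 0.75
) -> tuple[float, float]:
    """Return the (cpu, memory_gib) request that uses `headroom` of stated capacity."""
    return node_cpu * headroom, node_memory_gib * headroom


def fits_with_headroom(
    request_cpu: float,
    request_memory_gib: float,
    node_cpu: float,
    node_memory_gib: float,
    headroom: float = 0.75,
) -> bool:
    """Check a request against the headroom-adjusted capacity of one node."""
    max_cpu, max_memory = suggested_max_request(node_cpu, node_memory_gib, headroom)
    return request_cpu <= max_cpu and request_memory_gib <= max_memory
```

For a hypothetical node with 8 vCPUs and 32 GiB of memory, this suggests requesting at most 6 vCPUs and 24 GiB.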

Autoscaling

Chalk supports autoscaling of resources in your environment in order to optimize resource usage.

For the branch server, you can set the Auto Shutdown Period, which will determine the duration of

inactivity (no queries or deploys) after which the branch server pod will be automatically shut down.

For the Query Server and gRPC Query Server, which serve your production traffic, you can configure KEDA-based autoscaling. Autoscaling for either service is constrained by the nodepool configuration for your environment. Autoscaling can be triggered based on CPU utilization or on a schedule.

CPU-Based Autoscaling

To enable CPU-based autoscaling, set the Target CPU % in the Cloud Resource Configuration. Chalk then monitors the CPU utilization of the service and scales the number of instances up or down based on the target CPU percentage. For CPU-based autoscaling to work, Min Instances, Max Instances, and Target CPU % must all be set for the service.
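
To build intuition for how the three settings interact, the sketch below applies the standard Kubernetes Horizontal Pod Autoscaler utilization formula, which KEDA's CPU trigger relies on. It assumes that Target CPU % behaves like an HPA utilization target; it ignores the HPA tolerance band and stabilization windows, and `desired_instances` is a hypothetical helper, not part of any Chalk API.

```python
import math


def desired_instances(
    current_instances: int,
    current_cpu_pct: float,
    target_cpu_pct: float,
    min_instances: int,
    max_instances: int,
) -> int:
    """Scale proportionally to observed/target CPU, clamped to [min, max]."""
    if target_cpu_pct <= 0:
        raise ValueError("target_cpu_pct must be positive")
    # HPA-style rule: ceil(current replicas * current metric / target metric).
    raw = math.ceil(current_instances * current_cpu_pct / target_cpu_pct)
    return max(min_instances, min(max_instances, raw))
```

With 4 instances running at 90% CPU against a 60% target, this rule asks for 6 instances, provided Max Instances allows it.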

Scheduled Autoscaling

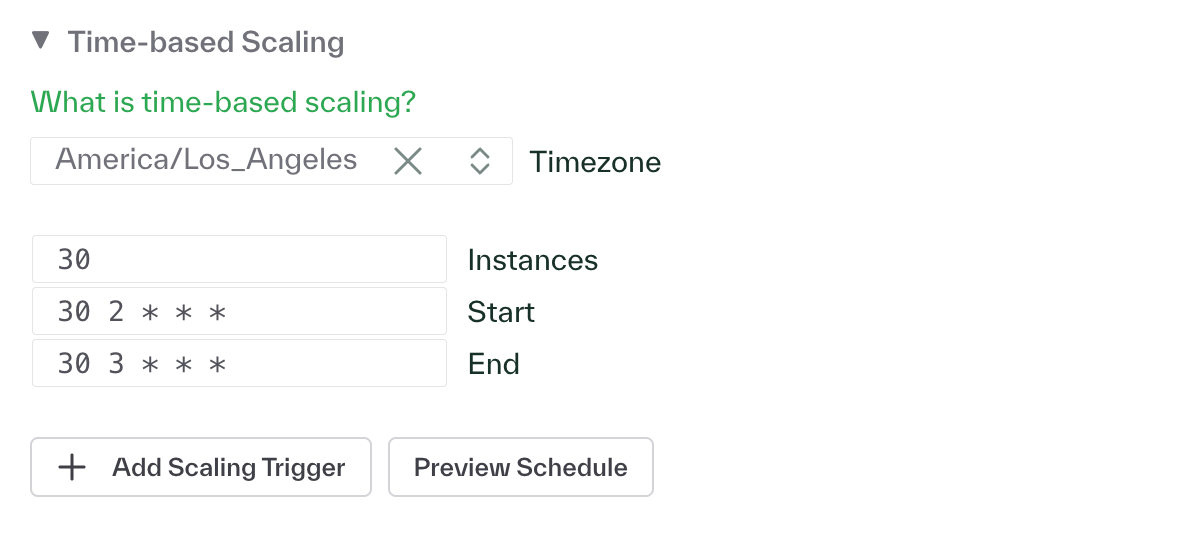

To enable scheduled autoscaling, you can define Scaling Triggers for each service configuration in the

Cloud Resource Configuration page. For each service in a resource group that you would like to autoscale,

define the Min Instances and Max Instances under Scaling. Then, under the ADVANCED section, under

Time-based Scaling, you can define one or more Scaling Triggers. For each Scaling Trigger, you can define

the desired number of replicas, the start time, the end time, and the timezone for the schedule. The start

time and end time are defined using standard cron syntax.
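
As an illustration of the four fields, the sketch below models a Scaling Trigger and checks that the start and end times are five-field cron expressions. The `ScalingTrigger` class is a hypothetical representation for this example, not a Chalk API, and the schedule values are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScalingTrigger:
    desired_replicas: int
    start: str  # cron expression: minute hour day-of-month month day-of-week
    end: str    # cron expression in the same format
    timezone: str  # IANA timezone name, e.g. "America/New_York"

    def __post_init__(self) -> None:
        if self.desired_replicas < 0:
            raise ValueError("desired_replicas must not be negative")
        for label, expr in (("start", self.start), ("end", self.end)):
            # Standard cron syntax has exactly five whitespace-separated fields.
            if len(expr.split()) != 5:
                raise ValueError(f"{label} must be a 5-field cron expression, got {expr!r}")


# Hold 10 replicas on weekdays between 08:00 and 18:00 New York time.
business_hours = ScalingTrigger(
    desired_replicas=10,
    start="0 8 * * 1-5",
    end="0 18 * * 1-5",
    timezone="America/New_York",
)
```

Here `0 8 * * 1-5` means minute 0 of hour 8 on Monday through Friday, so the trigger is active from 08:00 until the end expression fires at 18:00.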

Shared Resources

Under Settings > Shared Resources, environment admins can view and manage shared resources across

different services in your environment, including the Metrics Database configuration, Gateway

configuration, and Background Persistence configurations. You can adjust these configurations here

if, for example, you need to scale up your Metrics Database to handle larger volumes of data, or

if you drastically increase the volume of data being written to the online or offline stores.

To autoscale Background Persistence workers in response to variations in the volume of data being persisted online and offline, you can configure the Horizontal Pod Autoscaling (HPA) Settings in the Background Persistence Configuration tab. To enable autoscaling, you must set the following:

- Max replicas

- Min replicas

- Target average value (the approximate target undelivered message count in queue per replica)

- Pubsub Subscription ID
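
To show how the target average value relates to the replica bounds, the sketch below applies the standard Kubernetes HPA rule for an average-value metric: divide the total metric by the per-replica target and clamp the result. It assumes the Background Persistence autoscaler follows that rule; `persistence_replicas` is a hypothetical helper and the numbers are illustrative.

```python
import math


def persistence_replicas(
    undelivered_messages: int,
    target_average_value: float,
    min_replicas: int,
    max_replicas: int,
) -> int:
    """Replicas needed so each handles about `target_average_value` queued messages."""
    if target_average_value <= 0:
        raise ValueError("target_average_value must be positive")
    # HPA average-value rule: ceil(total metric / per-replica target).
    raw = math.ceil(undelivered_messages / target_average_value)
    return max(min_replicas, min(max_replicas, raw))
```

With 5,000 undelivered messages and a target average value of 1,000, this rule asks for 5 replicas, subject to the configured minimum and maximum.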

Connections

Under Settings > Connections, you can view all the connections that your environment has to external

resources, such as your online and offline stores, and the branch server. This is a good place to check

the health of the different services in your environment, as well as to verify the types of connections

configured.

Environment Variables

Under Settings > Environment > Variables, you can view and edit global environment variables for

your environment. Please reach out to the Chalk team if you have any questions about which environment variables

to set for your use case.

User Permissions and RBAC

Under Settings > User Permissions, you can view the roles associated with each user, as well as whether those

roles are granted directly or via SCIM. When adding new users to your Chalk environment, you can assign

them roles that will determine their permissions in the environment. The available roles in order of

increasing permissions are:

- Viewer: Read the web portal and create new alerts.

- Data Scientist: Run queries and branch deploy, plus everything that a Viewer can do.

- Developer: Run queries and run migrations, plus everything that a Data Scientist can do.

- Admin: Create deployments, service tokens, and secrets, plus everything that a Developer can do.

- Owner: Manage team members, plus everything that an Admin can do.
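
Because each role includes everything the previous role can do, the hierarchy can be modeled as an ordered enumeration. The sketch below is only an illustration of that ordering: the `Role` enum, the action names in `MINIMUM_ROLE`, and `is_allowed` are hypothetical and do not correspond to a Chalk API.

```python
from enum import IntEnum


class Role(IntEnum):
    VIEWER = 1
    DATA_SCIENTIST = 2
    DEVELOPER = 3
    ADMIN = 4
    OWNER = 5


# Lowest role that may perform each action (action names are made up).
MINIMUM_ROLE = {
    "create_alert": Role.VIEWER,
    "branch_deploy": Role.DATA_SCIENTIST,
    "run_migration": Role.DEVELOPER,
    "create_deployment": Role.ADMIN,
    "manage_team_members": Role.OWNER,
}


def is_allowed(role: Role, action: str) -> bool:
    """A role may perform an action if it ranks at or above the action's minimum."""
    return role >= MINIMUM_ROLE[action]
```

Under this model, a Developer can branch deploy because Developer ranks above Data Scientist, but cannot create deployments.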

Customers with Enterprise Features can also configure datasource and feature-level RBAC (Role Based Access Control).

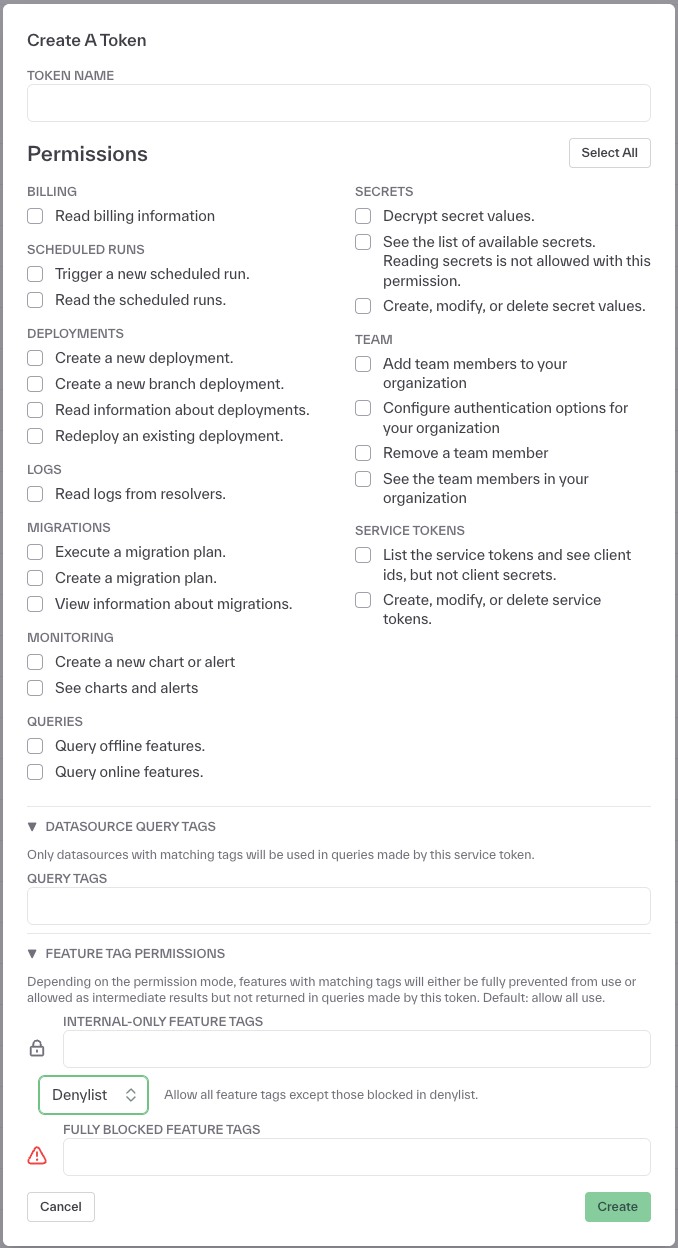

Under Settings > Access Tokens, you can create and manage service tokens that can be used for RBAC.

On the datasource level, you can restrict a token to only access data sources with matching tags to resolve features.

On the feature level, you can restrict a token’s access to tagged features either by

blocking the token from returning tagged features in any queries but allowing the feature values to be

used in the computation of other features, or by blocking the token from accessing tagged features entirely.

Denylisting vs Allowlisting Tags

When configuring feature-level RBAC, you can use either denylisting or allowlisting approaches to control access to tagged features:

Denylist: Users with a denylist configuration will be allowed to query all feature tags except those specifically blocked in the denylist. This is a permissive approach where access is granted by default, and only explicitly listed tags are restricted.

Allowlist: Users with an allowlist configuration will be denied access to all feature tags except those explicitly listed in the allowlist. This is a restrictive approach where access is denied by default, and only explicitly listed tags are permitted.
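
The difference between the two approaches comes down to the default decision for a tag that is not listed. The sketch below illustrates this per tag; `tag_is_queryable` and the example tag names are hypothetical, and how Chalk treats untagged features or features with several tags is not modeled here.

```python
def tag_is_queryable(tag: str, mode: str, listed_tags: set[str]) -> bool:
    """Decide access to a feature tag under a denylist or allowlist configuration."""
    if mode == "denylist":
        # Permissive default: everything is allowed unless explicitly listed.
        return tag not in listed_tags
    if mode == "allowlist":
        # Restrictive default: everything is denied unless explicitly listed.
        return tag in listed_tags
    raise ValueError(f"unknown mode: {mode!r}")
```

For example, with `{"pii"}` as the list, a denylist blocks only the `pii` tag, while an allowlist permits only the `pii` tag.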